Mojeek Search Summaries

If you take from the web, you should give back. Search engines like Google, Bing and Mojeek do that with hyperlinks, sending traffic back to the web pages which they have crawled and indexed. The voluntary agreement underpinning this exchange, expressed through website robots.txt files, has been based on the legal concept of fair use.

Generative AI products started to break this principle, notably with chatbots based on Large Language Models (LLMs). The breakout success of ChatGPT started a commercial race, which has accelerated this process. It’s an issue that both creators and publishers are extremely concerned about, as can be seen from the many lawsuits and data agreements being fought over.

We support the open web and have concerns about the current trend, as we have discussed before. It is also why we proposed the NoML meta tag. Importantly, at Mojeek we have always played fair, respecting robots.txt and simply providing links back to the websites that allow us to crawl.

Still, we do recognise that, despite their inherent tendency to hallucinate, LLMs can offer convenience and help with efficient research. They can be very useful for providing summaries during informational discovery and learning.

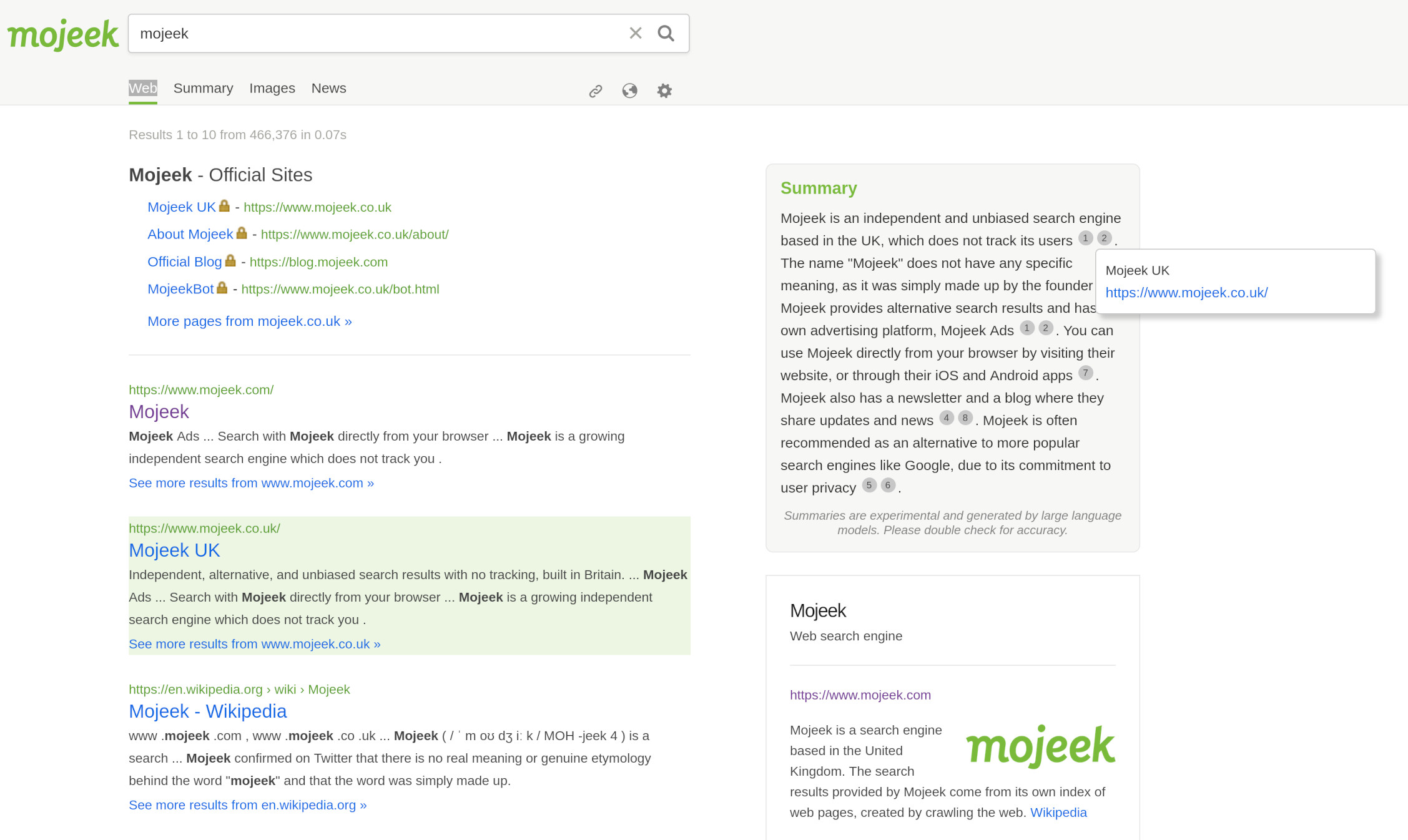

So what if you combine them? Provide search results and LLM generated summaries. We decided to offer summaries as an option in Mojeek. As you can see in the examples below, we offer this in two ways: firstly for “Web” as an optional summary to the right of the search results (on desktop):

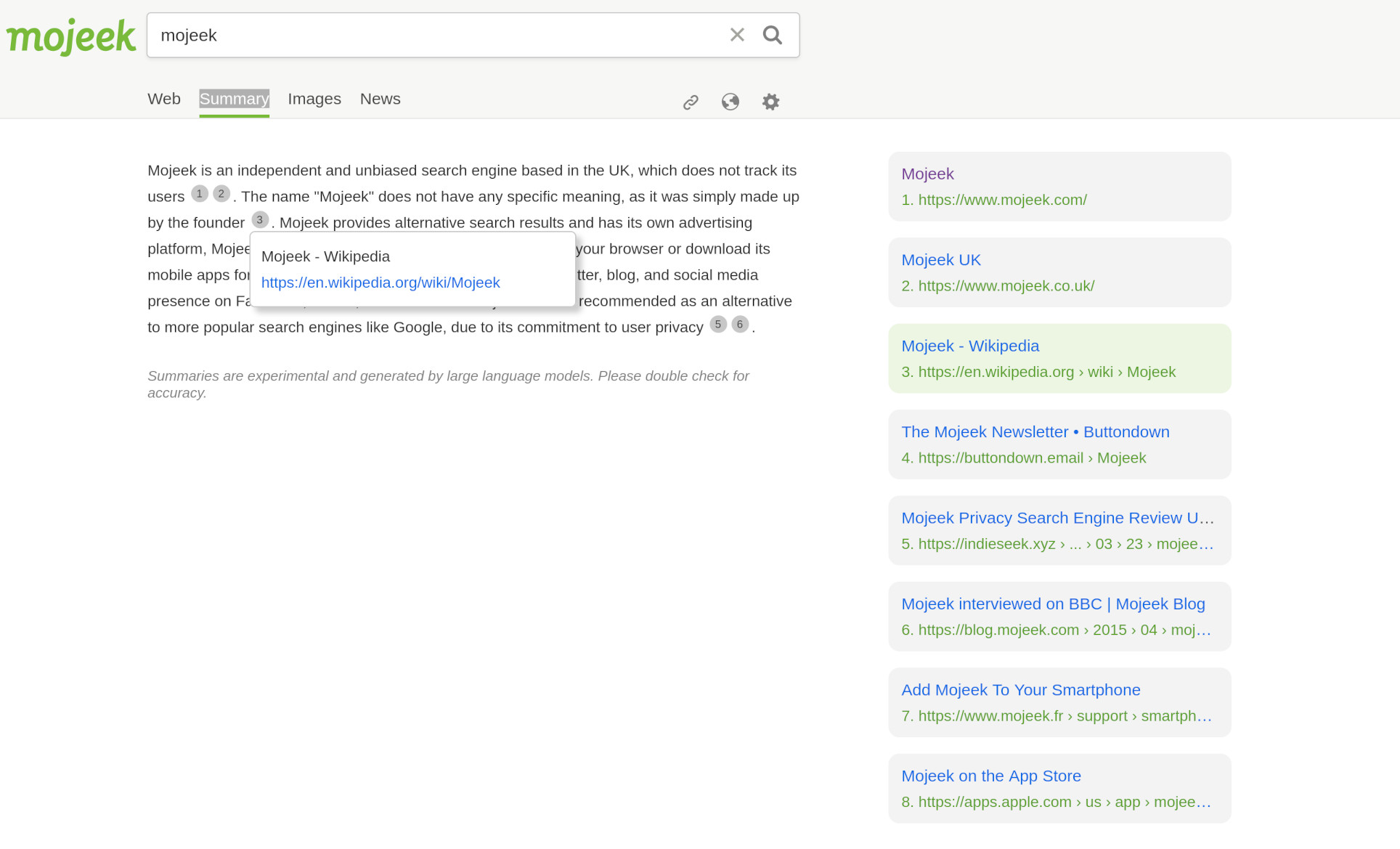

secondly as a “Summary” with search result hyperlinks to the right:

What’s fundamentally important is that these summaries have citations, which are themselves links back to the traditional search results. This both supports the web and enables easy checking of the summary text. When you hover over a citation, you get a hyperlink, and the corresponding search result is highlighted in light green.

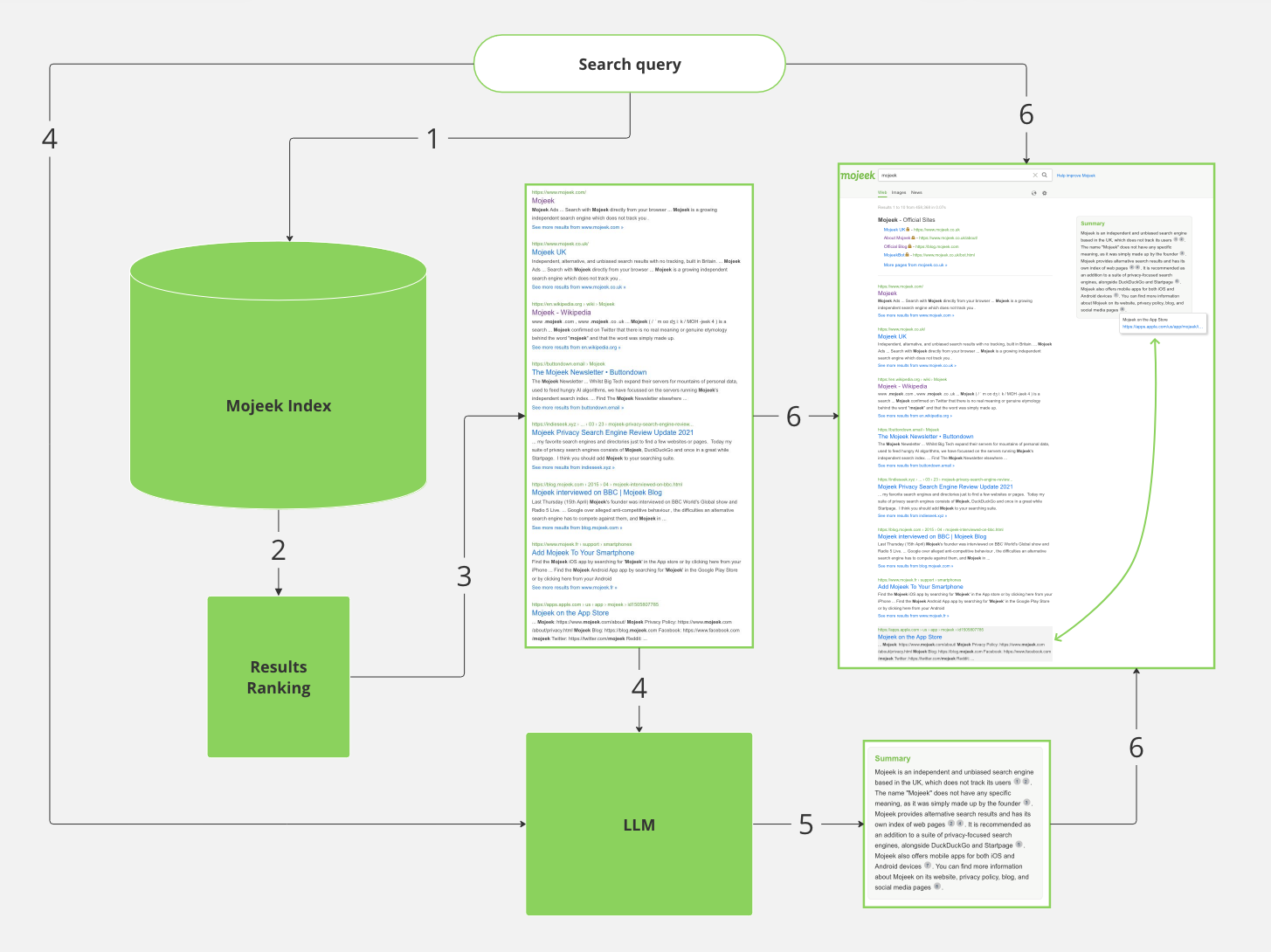
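To make the citation idea concrete, here is a minimal sketch of how numbered markers in a summary could be turned into links back to the search results. It assumes the LLM writes citations as `[1]`, `[2]` and so on, matching the order of the results. The function name `link_citations` and the `data-result` attribute are hypothetical, chosen for illustration, and not Mojeek's actual markup.

```python
import re
from html import escape

# Assumption: citations appear in the summary text as [1], [2], ...
CITATION = re.compile(r"\[(\d+)\]")


def link_citations(summary: str, result_urls: list[str]) -> str:
    """Replace each [n] marker with a link to the n-th search result."""

    def replace(match: re.Match) -> str:
        n = int(match.group(1))
        if 1 <= n <= len(result_urls):
            url = escape(result_urls[n - 1], quote=True)
            # data-result is a hypothetical hook a page script could use
            # to highlight the matching search result on hover.
            return f'<a href="{url}" data-result="{n}">[{n}]</a>'
        # A marker with no matching result is left as plain text.
        return match.group(0)

    # Escape the summary first so model output cannot inject markup.
    return CITATION.sub(replace, escape(summary))
```

Leaving unmatched markers untouched means a citation the model invented never becomes a link, which keeps every link checkable against a real search result.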

The architecture is shown below and works in these steps:

- The search query is sent to the Mojeek index

- Candidate relevant pages are retrieved and sent for ranking

- The top search results are extracted

- Data from the top search results are sent to the LLM, along with the search query

- The LLM generates a summary based on the search query and the Mojeek search results

- The query, results and LLM summary are displayed on the search results page

The process for generating summaries is known as RAG (Retrieval-Augmented Generation). For the LLM we are presently using Mixtral (via Lepton), a model not from Big Tech but from a French AI startup.
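The steps above can be sketched in a few lines of Python. This is an illustrative toy under stated assumptions, not Mojeek's implementation: the in-memory `index`, the term-overlap retrieval, the precomputed `score` field, and all function names are hypothetical. The `llm` argument is any callable that maps a prompt string to text, so no particular model API is assumed.

```python
import re
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class SearchResult:
    url: str
    title: str
    snippet: str
    score: float  # assumed to be a precomputed relevance score


def _terms(text: str) -> set:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(query: str, index: List[SearchResult]) -> List[SearchResult]:
    """Toy retrieval: keep pages sharing at least one term with the query."""
    wanted = _terms(query)
    return [r for r in index if wanted & _terms(r.title + " " + r.snippet)]


def rank(candidates: List[SearchResult], top_k: int = 3) -> List[SearchResult]:
    """Order candidates by score and keep the top results."""
    return sorted(candidates, key=lambda r: r.score, reverse=True)[:top_k]


def build_prompt(query: str, results: List[SearchResult]) -> str:
    """Number the sources so the model can cite them as [1], [2], ..."""
    sources = "\n".join(
        f"[{i}] {r.title} ({r.url}): {r.snippet}"
        for i, r in enumerate(results, start=1)
    )
    return (
        "Summarise an answer to the query using only the numbered sources, "
        "and cite them like [1].\n\n"
        f"Query: {query}\n\nSources:\n{sources}\n\nSummary:"
    )


def summarise(query: str, index: List[SearchResult],
              llm: Callable[[str], str], top_k: int = 3) -> dict:
    """Retrieve, rank, prompt the LLM, and return everything for display."""
    results = rank(retrieve(query, index), top_k)
    summary = llm(build_prompt(query, results))
    return {"query": query, "results": results, "summary": summary}
```

Because the model only sees the numbered top results, every citation in the summary can be traced back to a result shown on the page.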

As you would expect with Mojeek, you can choose to turn these summaries on or off. And of course, no tracking is involved.

As of today, all Mojeek users can try it out on the Web tab here, and in the new Summary tab here.